Introduction

Apache Kafka, one of the world’s most popular solutions for streaming massive amounts of data, continues to grow in popularity. At the recent NonStop TBC, Infrasoft saw this in sharp focus, with a number of customers and HPE NonStop partners talking to us about potential NonStop-Kafka use cases.

This article will give a summary of the capabilities, and potential use cases, for uLinga for Kafka – the best solution for integrating your HPE NonStop data and applications with Kafka.

Kafka usage continues to grow at pace – Kafka is now in use at more than 80% of the Fortune 100, and has big-name users including Barclays, Goldman Sachs, PayPal, Square, Target, and others.1

But what is Kafka? From the Apache Kafka website: “Apache Kafka is an open-source distributed event streaming platform used by thousands of companies for high-performance data pipelines, streaming analytics, data integration, and mission-critical applications.”2

Those sound a lot like environments where many HPE NonStop Servers also play a part, don’t they? Given that, it makes sense to have a good idea of how your NonStop applications and data can integrate with, and take advantage of, the capabilities of Kafka.

I recently came across this excellent animation on LinkedIn from Brij Kishore Pandey which shows the Top 5 current Kafka Use Cases. As these are relevant to NonStop users, I thought they would be a good place to start for this “Best of NonStop” edition of the Connection. In this article we will take a close look at the first 3 use cases in this animation.

uLinga for Kafka provides native integration with the Kafka cluster, ensuring the best performance and reliability of any NonStop Kafka integration solution.

Data Streaming

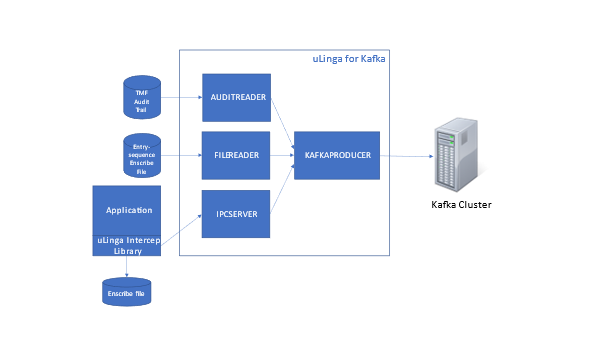

The first use case, Data Streaming, is fairly straightforward. This sees applications sending, or streaming, relevant aspects of the data they are processing to Kafka, for storage and forwarding to other applications/platforms. On the NonStop, uLinga for Kafka (ULK) can facilitate application data streaming in a number of ways.

The simplest option is where ULK directly reads data from application logs or audit trails. uLinga for Kafka can read from entry-sequenced Enscribe files, and from TMF audit trails. It can be configured to monitor one or many files (or SQL tables), and automatically forwards updates to those files/tables to Kafka. If your application uses relative or key-sequenced (non-TMF-protected) Enscribe files, then ULK’s intercept library can pick up changes to these files and ensure they get sent to Kafka.

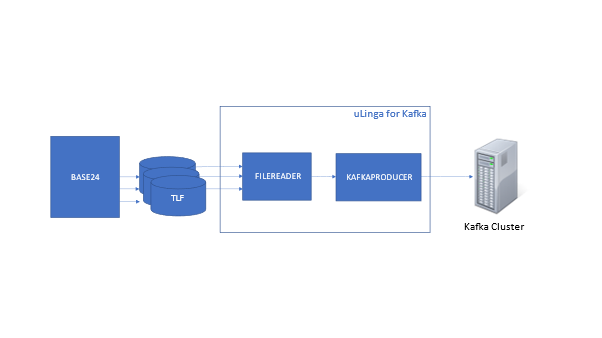

A common use case for this type of data streaming is to send application logs (such as a BASE24 Transaction Log File, or TLF) to Kafka. BASE24 usually has 2 or 3 TLFs active at any point in time; uLinga for Kafka supports this via FILEREADER configuration.
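
To make the file-monitoring idea concrete, here is a minimal Python sketch of the general technique: remember a read position for each monitored file and forward only the records appended since the last poll. It is an illustration only, not how uLinga for Kafka is implemented or configured. The class name `AppendOnlyFileTailer`, the newline-delimited record format, the file names and the `sent` list (a stand-in for messages produced to Kafka) are all assumptions made for the example.

```python
import os
import tempfile


class AppendOnlyFileTailer:
    """Hypothetical helper: remembers a read offset per file and returns
    only the complete records appended since the previous poll."""

    def __init__(self, paths):
        # Start at offset 0 so records already in a file are sent once.
        self.offsets = {path: 0 for path in paths}

    def poll(self):
        """Return (file name, record) pairs for newly appended records."""
        new_records = []
        for path in list(self.offsets):
            with open(path, "rb") as f:
                f.seek(self.offsets[path])
                while True:
                    line = f.readline()
                    if not line.endswith(b"\n"):
                        break  # end of file, or a record still being written
                    new_records.append((os.path.basename(path), line[:-1]))
                    self.offsets[path] = f.tell()
        return new_records


def demo():
    """Monitor two log files at once, in the spirit of multiple active TLFs."""
    sent = []  # stand-in for messages produced to Kafka
    with tempfile.TemporaryDirectory() as d:
        paths = [os.path.join(d, name) for name in ("tlf1.log", "tlf2.log")]
        for p in paths:
            open(p, "wb").close()  # create empty files
        tailer = AppendOnlyFileTailer(paths)
        with open(paths[0], "ab") as f:
            f.write(b"txn-001\n")
        with open(paths[1], "ab") as f:
            f.write(b"txn-002\npartial")  # second record not yet complete
        sent.extend(tailer.poll())
        with open(paths[1], "ab") as f:
            f.write(b"-done\n")  # the writer finishes the record
        sent.extend(tailer.poll())
    return sent
```

Keeping one offset per file is what lets a single reader follow several active files without resending records it has already forwarded, and holding back an incomplete record avoids streaming half-written data.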

Applications can also stream data directly via uLinga for Kafka, without writing to an interim file or table on the NonStop. ULK supports a range of APIs so that applications can write to it, and through it, to Kafka. ULK’s IPC interface allows applications to write to a ULK process over $RECEIVE, allowing very straightforward integration and Kafka streaming. An example use case for this scenario might be a home-grown NonStop application that needs to send selected data to Kafka. That application could simply be modified to perform a Guardian WRITEREAD to uLinga for Kafka, providing the data to be streamed.

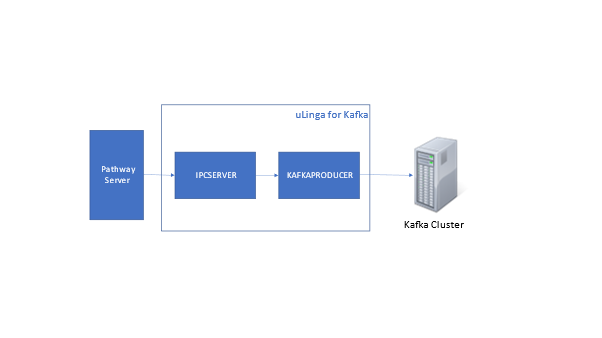

uLinga for Kafka’s Pathsend interface allows Pathsend clients (e.g., Pathway requestors and servers) to natively communicate with ULK, and through ULK, to Kafka. This could be used, for instance, if a Pathway server wished to stream the result of a transaction to Kafka. The ULK Pathsend interface allows the Pathway server to do a simple SERVERCLASS_SEND_ containing the data to be streamed, and ULK takes it from there, ensuring the data is written to Kafka.

TCP/IP and HTTP interfaces mean that other types of application, including those not necessarily running on the HPE NonStop, can also communicate with ULK and stream to Kafka.

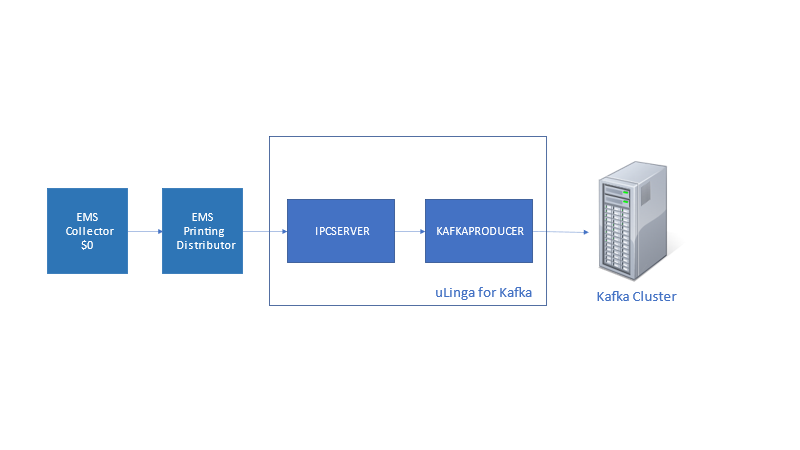

Log Activity/Tracker

On the HPE NonStop the main source of log data is the Event Management Service (EMS). EMS events can be fed to Kafka via uLinga for Kafka very simply. An EMS printing distributor can be configured to write data to uLinga for Kafka, and from there ULK streams those events directly to Kafka. The command required to start the EMS distributor would be something like:

TACL> EMSDIST /NOWAIT/ COLLECTOR $0, TYPE PRINTING, TEXTOUT $ULKAF

Where $ULKAF is the correctly configured uLinga for Kafka process. That’s it!

Message Queuing

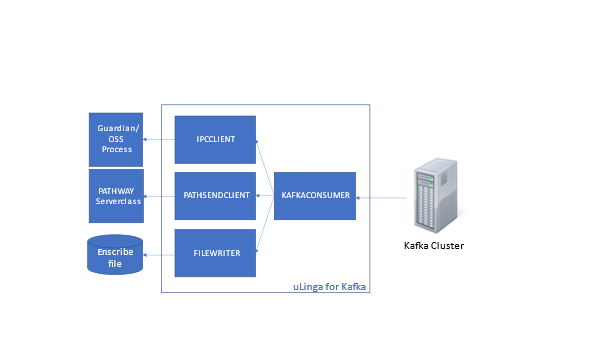

Kafka can be used for message queuing, and can provide a very high performance alternative to traditional message queuing solutions. On the NonStop, uLinga for Kafka can facilitate message queuing implementations, by virtue of the range of APIs it supports, as well as its “produce” and “consume” functionality.

One-way message queuing from NonStop to Kafka (and from there to other platforms and applications) can be achieved in much the same way as already outlined in “Data Streaming” above. One-way message queuing from other applications to Kafka (and from there to NonStop) via uLinga for Kafka requires ULK’s consumer functionality. uLinga for Kafka can monitor one or more topics in a Kafka cluster, consuming (reading) new messages as they are posted to the topic. Once consumed, they can be presented to a NonStop application via a range of mechanisms – via an interim file, or directly presented via Guardian IPC, TCP/IP or HTTP communications. ULK’s consumer functionality looks like this:

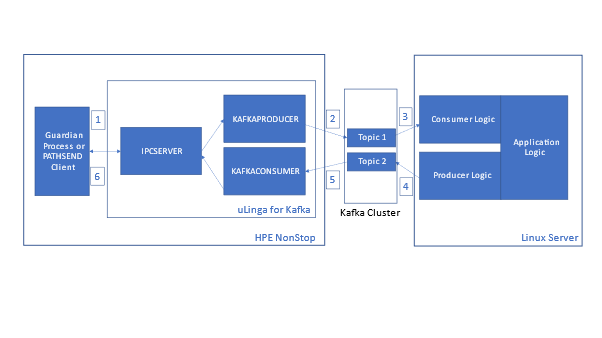
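
For readers who like to see the mechanics, the consume pattern described above (watch one or more topics, pick up new messages as they arrive, hand each one to an application) can be sketched in a few lines of Python. This is a toy, in-memory model under stated assumptions: `InMemoryTopicLog` stands in for a Kafka cluster, `TopicMonitor` and its `deliver` callback are hypothetical names, and none of it represents the ULK implementation or a real Kafka client API.

```python
from collections import defaultdict


class InMemoryTopicLog:
    """Toy stand-in for a Kafka cluster: each topic is an append-only list."""

    def __init__(self):
        self.topics = defaultdict(list)

    def produce(self, topic, value):
        self.topics[topic].append(value)
        return len(self.topics[topic]) - 1  # offset of the new message

    def fetch(self, topic, offset):
        # Return every message at or after the given offset.
        return self.topics[topic][offset:]


class TopicMonitor:
    """Hypothetical consumer: tracks the next offset to read per topic and
    hands each new message to a delivery callback (a file write, an IPC
    message, an HTTP call and so on)."""

    def __init__(self, log, topics, deliver):
        self.log = log
        self.next_offset = {topic: 0 for topic in topics}
        self.deliver = deliver

    def poll_once(self):
        """Deliver all messages posted since the last poll; return the count."""
        delivered = 0
        for topic in self.next_offset:
            for value in self.log.fetch(topic, self.next_offset[topic]):
                self.deliver(topic, value)
                # Advance only after delivery, so a failed delivery is retried.
                self.next_offset[topic] += 1
                delivered += 1
        return delivered
```

Calling `poll_once()` repeatedly delivers each message exactly once in this toy model, because the per-topic offset only moves forward after the callback returns.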

Two-way (or request-response) message queuing is possible via a combination of ULK’s produce and consume functionality. Messages can be sent to one Kafka topic, where they are consumed by a remote application, and a response returned via a separate Kafka topic. ULK logic correlates the response with the original request as needed to allow this functionality to be invoked via a Guardian WRITEREAD or Pathway SERVERCLASS_SEND_. The steps involved are covered below, assuming an application on a Linux server is responsible for processing the “request” and returning the “response”:

- Guardian application issues WRITEREAD/Pathway application issues SERVERCLASS_SEND_

- uLinga for Kafka PRODUCEs data from WRITEREAD/SERVERCLASS_SEND_ to Kafka, including correlation data (passed either in the Key or a custom header field)

- Linux Consumer logic retrieves data from Kafka, including correlation data, and the Linux application does some processing of the data

- Linux Producer logic sends response as a Kafka PRODUCE, including original correlation data

- uLinga for Kafka CONSUMEs response, including correlation data

- uLinga for Kafka correlates response to original request and completes the WRITEREAD/SERVERCLASS_SEND_
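
The steps above can be sketched as a small Python simulation. It is a sketch under stated assumptions rather than ULK's actual logic: plain lists stand in for the request and response topics, the header name `correlation-id` is hypothetical, and `RequestResponseBridge` and `remote_worker` are illustrative names for the NonStop-side and Linux-side roles.

```python
import uuid


class RequestResponseBridge:
    """Hypothetical NonStop-side role: produce requests carrying a
    correlation id, then match consumed responses back to their requests."""

    def __init__(self, request_topic, response_topic):
        self.request_topic = request_topic    # list standing in for a topic
        self.response_topic = response_topic  # list standing in for a topic
        self.pending = {}    # correlation id -> original request payload
        self.completed = {}  # correlation id -> response payload

    def send_request(self, payload):
        """PRODUCE step: attach a fresh correlation id in a header."""
        correlation_id = uuid.uuid4().hex
        self.pending[correlation_id] = payload
        self.request_topic.append(
            {"headers": {"correlation-id": correlation_id}, "value": payload}
        )
        return correlation_id

    def consume_responses(self):
        """CONSUME step: complete the pending request each response matches."""
        while self.response_topic:
            message = self.response_topic.pop(0)
            correlation_id = message["headers"].get("correlation-id")
            if correlation_id in self.pending:
                del self.pending[correlation_id]
                self.completed[correlation_id] = message["value"]
            # Responses with an unknown correlation id are ignored here.


def remote_worker(request_topic, response_topic):
    """Hypothetical Linux-side role: consume each request, process it and
    produce a response that echoes the original correlation header."""
    while request_topic:
        request = request_topic.pop(0)
        response_topic.append(
            {"headers": dict(request["headers"]), "value": request["value"].upper()}
        )
```

Because matching is done on the correlation id rather than on arrival order, responses can come back in any order and still complete the right request.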

There is also significant work going on in the Kafka community to provide further support for queuing with Kafka. Infrasoft is staying abreast of this work and will be ready to enhance uLinga for Kafka as needed to support these additional features. For those wanting to know more, see the Kafka Improvement Proposal (KIP) 932: Queues for Kafka.

Hopefully this article has given you some ideas of how you might use Kafka in your environment to get the most out of your NonStop applications and data. If you would like to discuss any of the use cases described here, or anything else related to NonStop-Kafka integration, please get in touch with us at productinfo@infrasoft.com.au.