Introduction

HPE NonStop users are becoming more familiar with Kafka, as Kafka in turn becomes more and more prevalent inside those large environments where the NonStop platform is often present. Kafka is being used by thousands of companies, including 60% of the Fortune 100.

These organizations use Kafka to manage “streams” of data, which have become more common as internet usage massively boosts the amount of data being generated and needing to be processed. Kafka allows these huge volumes of data to be processed in real-time, via a combination of “producers” and “consumers”, which work with a Kafka “cluster” – the main data repository.

Many HPE NonStop users need to integrate with Kafka in some way, be it to stream NonStop application data to Kafka, or integrate data from Kafka back into their NonStop applications. While there are Java-based solutions to send (PRODUCE) data to and receive (CONSUME) data from Kafka, these require OSS and may not perform at the level that NonStop users require.

uLinga for Kafka, which has had Kafka Producer functionality for over a year, has recently been enhanced to add Kafka Consumer functionality. This article explains how that functionality works and gives some potential use cases that NonStop users may find interesting.

uLinga for Kafka – Overview

The latest release of uLinga for Kafka adds Kafka Consumer functionality, bringing with it the same lightning-fast performance as the existing Producer functionality.

uLinga for Kafka as Kafka Consumer

Previous releases of uLinga for Kafka provide a range of options to stream NonStop data to Kafka, via uLinga’s Kafka Producer functionality. These features have been outlined in our previous Connection articles.

With the addition of Kafka Consumer functionality, uLinga now supports a range of options to stream Kafka data directly from the Kafka cluster and either apply that data to a NonStop database or send it directly to a NonStop application.

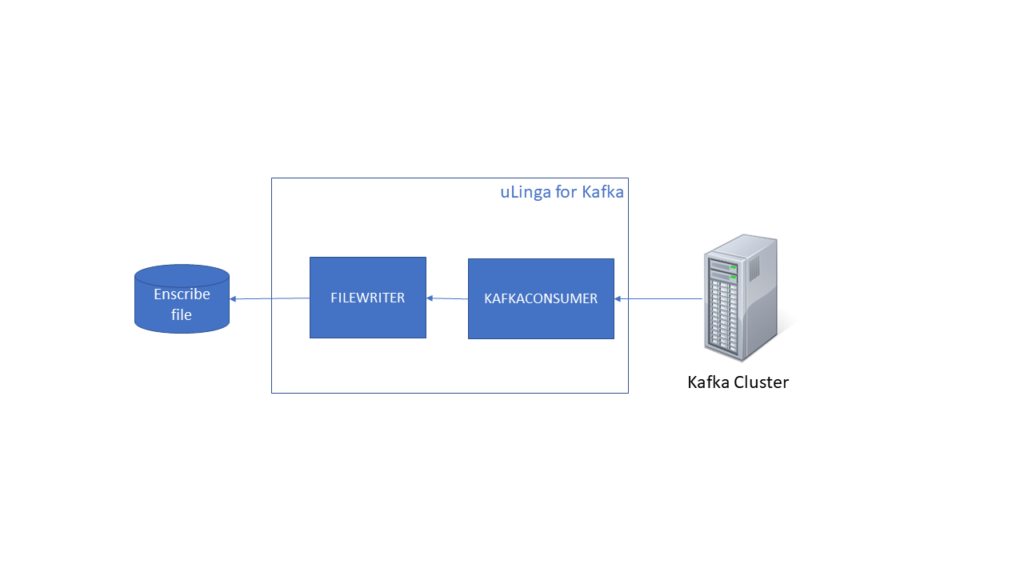

The diagram above depicts uLinga for Kafka acting as Kafka Consumer and writing the data consumed to an Enscribe file. This file could be used, for example, as a database refresh for a NonStop application. As events/records are streamed to the cluster by a remote producer, uLinga’s Consumer logic will retrieve those records, process them, and apply them to the Enscribe file. As with uLinga’s Producer functionality, very high throughput (greater than 20,000 TPS) is possible, with sub-millisecond latency. This allows even the largest application requirements to be supported.
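The retrieve, process and apply flow described above can be illustrated with a small sketch. This is not uLinga's implementation: the `Record` type, the `apply_records` function, the in-memory dictionary standing in for a keyed Enscribe file, and the treatment of an empty value as a delete are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class Record:
    """Hypothetical shape of a consumed record."""
    offset: int
    key: bytes
    value: Optional[bytes]  # None is treated as a delete (assumption of this sketch)


def apply_records(records: Iterable[Record],
                  store: Dict[bytes, bytes],
                  last_applied: int = -1) -> int:
    """Apply consumed records to a keyed store and return the highest offset applied.

    The dictionary stands in for a keyed file. Records at or below
    last_applied are skipped, so a redelivered batch is not applied twice.
    """
    for rec in records:
        if rec.offset <= last_applied:
            continue  # already applied on an earlier pass
        if rec.value is None:
            store.pop(rec.key, None)   # delete the keyed entry if present
        else:
            store[rec.key] = rec.value  # insert or update the keyed entry
        last_applied = rec.offset
    return last_applied
```

Tracking the last applied offset alongside the data is one simple way to make the apply step safe to repeat after a restart; other designs are equally possible.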

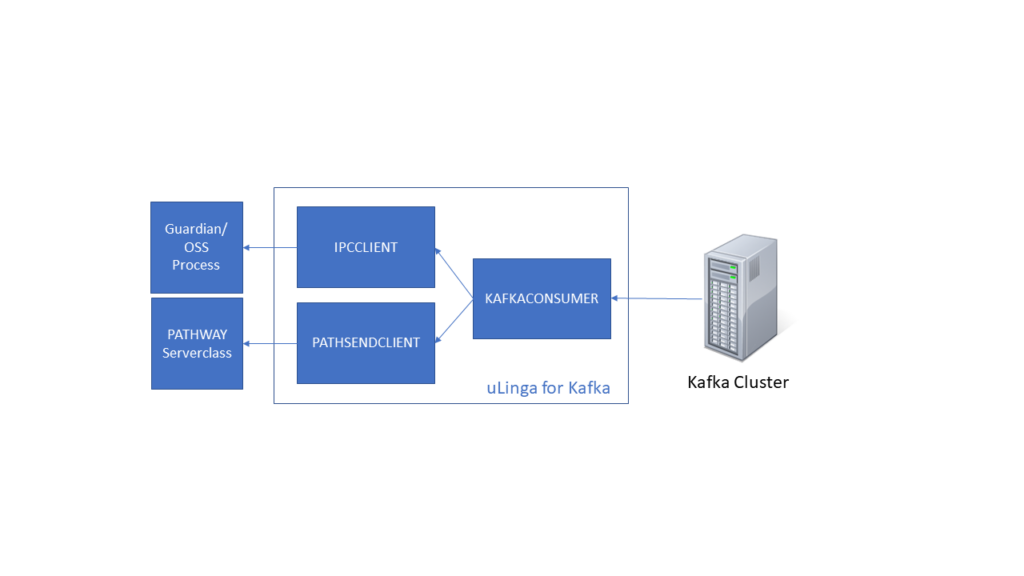

Figure 2 shows another use case for Kafka Consumer functionality. In this scenario uLinga for Kafka forwards consumed data directly to a NonStop application, either via a Guardian WRITE/WRITEREAD or via a Pathway SERVERCLASS_SEND_ directly to a Pathway Serverclass. Once again this configuration supports very high throughput, allowing for any application load to be handled. This implementation might be used, for instance, to apply online updates to an application database.

As our previous Connection article outlined, uLinga for Kafka supports User Exits and these can be invoked in either of the above scenarios to support data manipulation or any other custom processing.

Using Kafka for Transactional Messaging

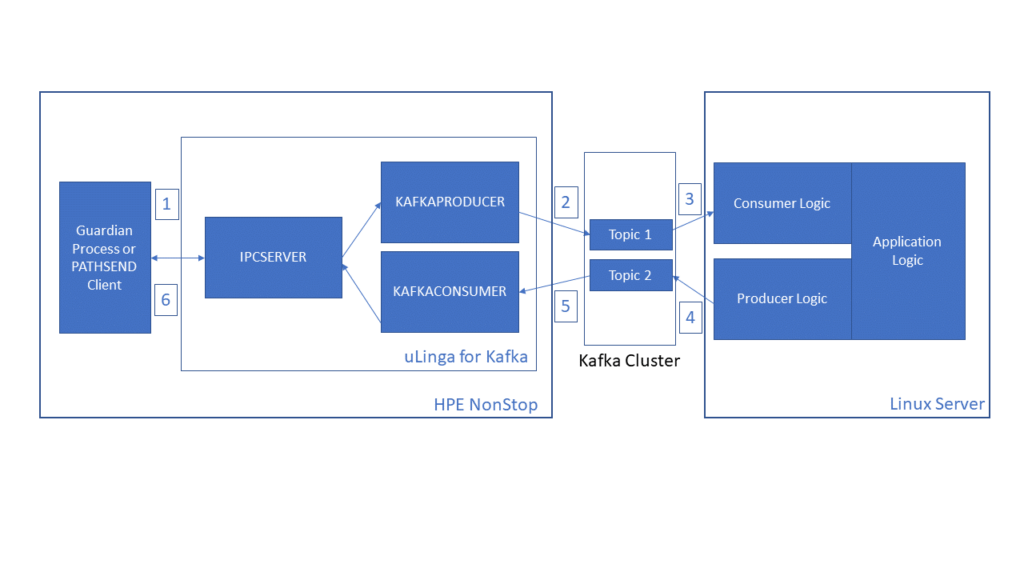

The addition of Consumer functionality to uLinga for Kafka opens up some interesting possibilities where Kafka can be used to handle large-scale transaction messaging. When a produced request message can be linked to a consumed response, you have a model for distributed transaction processing that looks quite similar to some message queuing solutions. Consider the following example configuration:

The following steps take place:

- Guardian application issues WRITEREAD/Pathway application issues SERVERCLASS_SEND_

- uLinga for Kafka PRODUCEs data from WRITEREAD/SERVERCLASS_SEND_ to Kafka, including correlation data (passed either in the Key or a custom header field)

- Linux Consumer logic retrieves the data from Kafka, including the correlation data, and the Linux application processes it

- Linux Producer logic sends response as a Kafka PRODUCE, including original correlation data

- uLinga for Kafka CONSUMEs response, including correlation data

- uLinga for Kafka correlates response to original request and completes the WRITEREAD/SERVERCLASS_SEND_
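The correlation at the heart of these steps can be sketched in a few lines. This is a simplified simulation, not uLinga's implementation: in-memory queues stand in for the Kafka request and response topics, a thread stands in for the Linux application, and the names `Correlator`, `send_and_wait`, `remote_application` and `demo` are hypothetical.

```python
import queue
import threading
import uuid


class Correlator:
    """Matches consumed responses to outstanding requests by correlation ID."""

    def __init__(self, request_topic):
        self._request_topic = request_topic  # stand-in for a Kafka topic
        self._pending = {}                   # correlation ID -> waiting slot
        self._lock = threading.Lock()

    def send_and_wait(self, payload, timeout=2.0):
        """Produce a request and block until the matching response arrives.

        Raises queue.Empty if no response arrives within the timeout.
        """
        corr_id = uuid.uuid4().hex
        slot = queue.Queue()
        with self._lock:
            self._pending[corr_id] = slot
        # The correlation data travels with the request (key or header).
        self._request_topic.put((corr_id, payload))
        try:
            return slot.get(timeout=timeout)
        finally:
            with self._lock:
                self._pending.pop(corr_id, None)

    def on_response(self, corr_id, payload):
        """Hand a consumed response to the waiting requester, if any."""
        with self._lock:
            slot = self._pending.get(corr_id)
        if slot is not None:
            slot.put(payload)
        # Responses with an unknown correlation ID are dropped in this sketch.


def remote_application(request_topic, response_topic):
    """Stand-in for the Linux side: consume, process, produce a response."""
    while True:
        corr_id, payload = request_topic.get()
        response_topic.put((corr_id, payload.upper()))  # echo the correlation ID


def response_consumer(response_topic, correlator):
    """Consume responses and pass them to the correlator."""
    while True:
        corr_id, payload = response_topic.get()
        correlator.on_response(corr_id, payload)


def demo(payload):
    """Run one request/response round trip through the simulated topics."""
    request_topic, response_topic = queue.Queue(), queue.Queue()
    correlator = Correlator(request_topic)
    threading.Thread(target=remote_application,
                     args=(request_topic, response_topic), daemon=True).start()
    threading.Thread(target=response_consumer,
                     args=(response_topic, correlator), daemon=True).start()
    return correlator.send_and_wait(payload)
```

From the caller's point of view, `send_and_wait` behaves like a single blocking request/response call, which mirrors how the NonStop application sees only its WRITEREAD or SERVERCLASS_SEND_ complete.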

This configuration provides a high-performance, reliable messaging mechanism between HPE NonStop and (in this example) a Linux/cloud-based application, utilising the extensive Kafka support already available to Linux applications. It is also an extremely simple method, from the point of view of the NonStop application, to communicate via Kafka – using a straightforward WRITEREAD or SERVERCLASS_SEND_.

Depending on specific application and environmental requirements, this approach may provide better clustering/fault-tolerance than some established queuing solutions. It is also likely to perform at least as well as, if not considerably better than, most other alternatives. In particular, the low latency of this approach is impressive: initial testing by Infrasoft has seen overall latency as low as 1ms with this configuration.

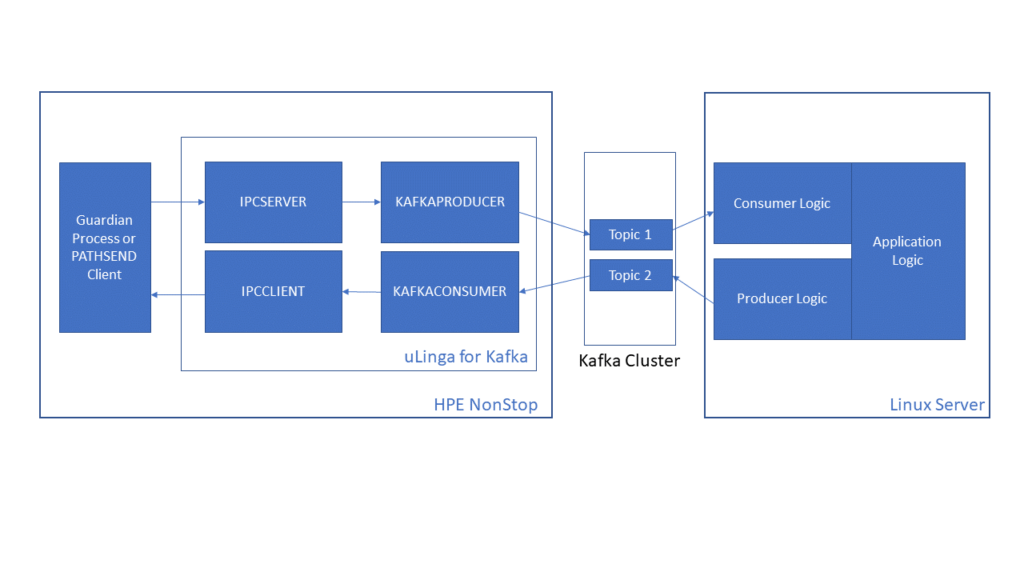

If the request and response need to be sent on different “channels”, this can be achieved with a minor adjustment to the configuration depicted in Figure 3, which provides completely asynchronous request/response handling.

In this example, the NonStop application would handle context management between request and response messages.
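As a rough illustration of what that context management might involve, the sketch below keeps a table of outstanding requests keyed by correlation ID, so that a response arriving later on a separate channel can be matched to the request that caused it. The `RequestContextTable` class and its ID format are hypothetical and are not part of uLinga for Kafka.

```python
class RequestContextTable:
    """Tracks the context of requests that have not yet been answered."""

    def __init__(self):
        self._next_id = 0
        self._contexts = {}  # correlation ID -> saved application context

    def register(self, context):
        """Save context for a new request and return its correlation ID."""
        self._next_id += 1
        corr_id = f"req-{self._next_id}"
        self._contexts[corr_id] = context
        return corr_id

    def complete(self, corr_id):
        """Return and remove the saved context, or None if the ID is unknown."""
        return self._contexts.pop(corr_id, None)

    def outstanding(self):
        """Number of requests still waiting for a response."""
        return len(self._contexts)
```

The application would call `register` before sending each request and `complete` when a response arrives. Because entries are looked up by correlation ID, responses can be handled in any order.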

Conclusion

uLinga for Kafka provides reliable, high-performance options for integrating NonStop applications and data with Kafka. The addition of Kafka Consumer functionality gives customers the ability to stream data from Kafka and easily incorporate it into NonStop databases and applications. With uLinga for Kafka’s unique NonStop interprocess communication (IPC) support, customers have the ability to use Kafka in new ways, be it as a high-performance messaging hub, a feed for application updates or any number of additional use cases.

For more information on uLinga for Kafka, speak to our partners at comforte and TIC Software, or contact us at productinfo@infrasoft.com.au.